import numpy as np

import pandas as pd

object creation

s=pd.Series([1,3,5,np.nan,6,8])

s

0 1.0

1 3.0

2 5.0

3 NaN

4 6.0

5 8.0

dtype: float64

dates=pd.date_range("20130101",periods=6)

dates

DatetimeIndex(['2013-01-01', '2013-01-02', '2013-01-03', '2013-01-04',

'2013-01-05', '2013-01-06'],

dtype='datetime64[ns]', freq='D')

df= pd.DataFrame(np.random.randn(6,4),index=dates,columns=list("ABCD"))

df

| A | B | C | D | |

|---|---|---|---|---|

| 2013-01-01 | -1.171673 | -1.282048 | -1.240960 | -1.382591 |

| 2013-01-02 | 0.747014 | -0.098745 | -2.674977 | -0.766007 |

| 2013-01-03 | 0.344874 | -1.071516 | 0.138593 | 0.563455 |

| 2013-01-04 | 0.032506 | 0.100209 | -0.258473 | -1.114725 |

| 2013-01-05 | 0.141728 | 2.327885 | 1.172082 | 1.085766 |

| 2013-01-06 | -1.335232 | -1.043389 | 2.569405 | 1.850168 |

df2= pd.DataFrame({"A":1.0,"B":pd.Timestamp("20130102"),

"C":pd.Series(1,index=list(range(4)),dtype="float32"),

"D":np.array([3]*4,dtype="int32"),

"E":pd.Categorical(["test","train","test","train"]),

"F":"foo",})

df2

| A | B | C | D | E | F | |

|---|---|---|---|---|---|---|

| 0 | 1.0 | 2013-01-02 | 1.0 | 3 | test | foo |

| 1 | 1.0 | 2013-01-02 | 1.0 | 3 | train | foo |

| 2 | 1.0 | 2013-01-02 | 1.0 | 3 | test | foo |

| 3 | 1.0 | 2013-01-02 | 1.0 | 3 | train | foo |

df2.dtypes

A float64

B datetime64[ns]

C float32

D int32

E category

F object

dtype: object

df.head()

| A | B | C | D | |

|---|---|---|---|---|

| 2013-01-01 | -1.171673 | -1.282048 | -1.240960 | -1.382591 |

| 2013-01-02 | 0.747014 | -0.098745 | -2.674977 | -0.766007 |

| 2013-01-03 | 0.344874 | -1.071516 | 0.138593 | 0.563455 |

| 2013-01-04 | 0.032506 | 0.100209 | -0.258473 | -1.114725 |

| 2013-01-05 | 0.141728 | 2.327885 | 1.172082 | 1.085766 |

df.tail()

| A | B | C | D | |

|---|---|---|---|---|

| 2013-01-02 | 0.747014 | -0.098745 | -2.674977 | -0.766007 |

| 2013-01-03 | 0.344874 | -1.071516 | 0.138593 | 0.563455 |

| 2013-01-04 | 0.032506 | 0.100209 | -0.258473 | -1.114725 |

| 2013-01-05 | 0.141728 | 2.327885 | 1.172082 | 1.085766 |

| 2013-01-06 | -1.335232 | -1.043389 | 2.569405 | 1.850168 |

df.index

DatetimeIndex(['2013-01-01', '2013-01-02', '2013-01-03', '2013-01-04',

'2013-01-05', '2013-01-06'],

dtype='datetime64[ns]', freq='D')

df.columns

Index(['A', 'B', 'C', 'D'], dtype='object')

df.to_numpy()

array([[ 1.59342018, 2.65239188, 0.49500638, -0.73513749],

[ 0.56375966, -2.66504831, 0.37451522, -1.10341922],

[-0.2827687 , 1.07071856, -0.50046244, -0.48868478],

[-1.66030333, -0.08691766, 0.55157115, -0.26135908],

[-0.40783401, -0.83482756, -1.80988001, -1.30816714],

[ 0.22261522, -1.87903334, 2.08030501, -0.16128424]])

df2.to_numpy()

array([[1.0, Timestamp('2013-01-02 00:00:00'), 1.0, 3, 'test', 'foo'],

[1.0, Timestamp('2013-01-02 00:00:00'), 1.0, 3, 'train', 'foo'],

[1.0, Timestamp('2013-01-02 00:00:00'), 1.0, 3, 'test', 'foo'],

[1.0, Timestamp('2013-01-02 00:00:00'), 1.0, 3, 'train', 'foo']],

dtype=object)

df.describe()

| A | B | C | D | |

|---|---|---|---|---|

| count | 6.000000 | 6.000000 | 6.000000 | 6.000000 |

| mean | 0.004815 | -0.290453 | 0.198509 | -0.676342 |

| std | 1.087118 | 1.950035 | 1.288944 | 0.459949 |

| min | -1.660303 | -2.665048 | -1.809880 | -1.308167 |

| 25% | -0.376568 | -1.617982 | -0.281718 | -1.011349 |

| 50% | -0.030077 | -0.460873 | 0.434761 | -0.611911 |

| 75% | 0.478474 | 0.781310 | 0.537430 | -0.318191 |

| max | 1.593420 | 2.652392 | 2.080305 | -0.161284 |

df.T

| 2013-01-01 | 2013-01-02 | 2013-01-03 | 2013-01-04 | 2013-01-05 | 2013-01-06 | |

|---|---|---|---|---|---|---|

| A | 1.593420 | 0.563760 | -0.282769 | -1.660303 | -0.407834 | 0.222615 |

| B | 2.652392 | -2.665048 | 1.070719 | -0.086918 | -0.834828 | -1.879033 |

| C | 0.495006 | 0.374515 | -0.500462 | 0.551571 | -1.809880 | 2.080305 |

| D | -0.735137 | -1.103419 | -0.488685 | -0.261359 | -1.308167 | -0.161284 |

df.sort_values(by='B')

| A | B | C | D | |

|---|---|---|---|---|

| 2013-01-02 | 0.563760 | -2.665048 | 0.374515 | -1.103419 |

| 2013-01-06 | 0.222615 | -1.879033 | 2.080305 | -0.161284 |

| 2013-01-05 | -0.407834 | -0.834828 | -1.809880 | -1.308167 |

| 2013-01-04 | -1.660303 | -0.086918 | 0.551571 | -0.261359 |

| 2013-01-03 | -0.282769 | 1.070719 | -0.500462 | -0.488685 |

| 2013-01-01 | 1.593420 | 2.652392 | 0.495006 | -0.735137 |

Selection

df['A'] #Getting

2013-01-01 1.593420

2013-01-02 0.563760

2013-01-03 -0.282769

2013-01-04 -1.660303

2013-01-05 -0.407834

2013-01-06 0.222615

Freq: D, Name: A, dtype: float64

df[0:3] #slice the rows

| A | B | C | D | |

|---|---|---|---|---|

| 2013-01-01 | 1.593420 | 2.652392 | 0.495006 | -0.735137 |

| 2013-01-02 | 0.563760 | -2.665048 | 0.374515 | -1.103419 |

| 2013-01-03 | -0.282769 | 1.070719 | -0.500462 | -0.488685 |

df["20130102":"20130104"]

| A | B | C | D | |

|---|---|---|---|---|

| 2013-01-02 | 0.563760 | -2.665048 | 0.374515 | -1.103419 |

| 2013-01-03 | -0.282769 | 1.070719 | -0.500462 | -0.488685 |

| 2013-01-04 | -1.660303 | -0.086918 | 0.551571 | -0.261359 |

df.loc[dates[0]]

A 1.593420

B 2.652392

C 0.495006

D -0.735137

Name: 2013-01-01 00:00:00, dtype: float64

df.loc[:,["A","B"]] #Selection by Label

| A | B | |

|---|---|---|

| 2013-01-01 | 1.593420 | 2.652392 |

| 2013-01-02 | 0.563760 | -2.665048 |

| 2013-01-03 | -0.282769 | 1.070719 |

| 2013-01-04 | -1.660303 | -0.086918 |

| 2013-01-05 | -0.407834 | -0.834828 |

| 2013-01-06 | 0.222615 | -1.879033 |

df.loc["20130102":"20130104",["A","B"]]

| A | B | |

|---|---|---|

| 2013-01-02 | 0.563760 | -2.665048 |

| 2013-01-03 | -0.282769 | 1.070719 |

| 2013-01-04 | -1.660303 | -0.086918 |

df.loc["20130102", ["A","B"]]

A 0.563760

B -2.665048

Name: 2013-01-02 00:00:00, dtype: float64

df.loc[dates[0],"A"]

1.5934201795971803

df.at[dates[0],"A"]

1.5934201795971803

Selection by position

df.iloc[3]

A -1.660303

B -0.086918

C 0.551571

D -0.261359

Name: 2013-01-04 00:00:00, dtype: float64

df.iloc[3:5,0:2]

| A | B | |

|---|---|---|

| 2013-01-04 | -1.660303 | -0.086918 |

| 2013-01-05 | -0.407834 | -0.834828 |

df.iloc[[1,2,4],[0,2]]

| A | C | |

|---|---|---|

| 2013-01-02 | 0.563760 | 0.374515 |

| 2013-01-03 | -0.282769 | -0.500462 |

| 2013-01-05 | -0.407834 | -1.809880 |

df.iloc[1:3,:]

| A | B | C | D | |

|---|---|---|---|---|

| 2013-01-02 | 0.563760 | -2.665048 | 0.374515 | -1.103419 |

| 2013-01-03 | -0.282769 | 1.070719 | -0.500462 | -0.488685 |

df.iloc[:,1:3]

| B | C | |

|---|---|---|

| 2013-01-01 | 2.652392 | 0.495006 |

| 2013-01-02 | -2.665048 | 0.374515 |

| 2013-01-03 | 1.070719 | -0.500462 |

| 2013-01-04 | -0.086918 | 0.551571 |

| 2013-01-05 | -0.834828 | -1.809880 |

| 2013-01-06 | -1.879033 | 2.080305 |

df.iloc[1,1]

-2.6650483125361175

df.iat[1,1] #equivalent to the prior method

-2.6650483125361175

Boolean indexing

df[df["A"]>0]

| A | B | C | D | |

|---|---|---|---|---|

| 2013-01-01 | 1.593420 | 2.652392 | 0.495006 | -0.735137 |

| 2013-01-02 | 0.563760 | -2.665048 | 0.374515 | -1.103419 |

| 2013-01-06 | 0.222615 | -1.879033 | 2.080305 | -0.161284 |

df[df>0]

| A | B | C | D | |

|---|---|---|---|---|

| 2013-01-01 | 1.593420 | 2.652392 | 0.495006 | NaN |

| 2013-01-02 | 0.563760 | NaN | 0.374515 | NaN |

| 2013-01-03 | NaN | 1.070719 | NaN | NaN |

| 2013-01-04 | NaN | NaN | 0.551571 | NaN |

| 2013-01-05 | NaN | NaN | NaN | NaN |

| 2013-01-06 | 0.222615 | NaN | 2.080305 | NaN |

df2=df.copy() #make same DataFrame

df2["E"]=["one","one","two","three","four","three"]

df2

| A | B | C | D | E | |

|---|---|---|---|---|---|

| 2013-01-01 | 1.593420 | 2.652392 | 0.495006 | -0.735137 | one |

| 2013-01-02 | 0.563760 | -2.665048 | 0.374515 | -1.103419 | one |

| 2013-01-03 | -0.282769 | 1.070719 | -0.500462 | -0.488685 | two |

| 2013-01-04 | -1.660303 | -0.086918 | 0.551571 | -0.261359 | three |

| 2013-01-05 | -0.407834 | -0.834828 | -1.809880 | -1.308167 | four |

| 2013-01-06 | 0.222615 | -1.879033 | 2.080305 | -0.161284 | three |

df2[df2["E"].isin(["two","four"])] #isin() method for filtering

| A | B | C | D | E | |

|---|---|---|---|---|---|

| 2013-01-03 | -0.282769 | 1.070719 | -0.500462 | -0.488685 | two |

| 2013-01-05 | -0.407834 | -0.834828 | -1.809880 | -1.308167 | four |

Setting

s1=pd.Series([1,2,3,4,5,6],index=pd.date_range("20130102",periods=6))

s1

2013-01-02 1

2013-01-03 2

2013-01-04 3

2013-01-05 4

2013-01-06 5

2013-01-07 6

Freq: D, dtype: int64

df['F']=s1

df

| A | B | C | D | F | |

|---|---|---|---|---|---|

| 2013-01-01 | -1.171673 | -1.282048 | -1.240960 | -1.382591 | NaN |

| 2013-01-02 | 0.747014 | -0.098745 | -2.674977 | -0.766007 | 1.0 |

| 2013-01-03 | 0.344874 | -1.071516 | 0.138593 | 0.563455 | 2.0 |

| 2013-01-04 | 0.032506 | 0.100209 | -0.258473 | -1.114725 | 3.0 |

| 2013-01-05 | 0.141728 | 2.327885 | 1.172082 | 1.085766 | 4.0 |

| 2013-01-06 | -1.335232 | -1.043389 | 2.569405 | 1.850168 | 5.0 |

df.at[dates[0],"A"]=0

df.iat[0,1]=0

df.loc[:,"D"]=np.array([5]*len(df))

df

| A | B | C | D | F | |

|---|---|---|---|---|---|

| 2013-01-01 | 0.000000 | 0.000000 | -1.240960 | 5 | NaN |

| 2013-01-02 | 0.747014 | -0.098745 | -2.674977 | 5 | 1.0 |

| 2013-01-03 | 0.344874 | -1.071516 | 0.138593 | 5 | 2.0 |

| 2013-01-04 | 0.032506 | 0.100209 | -0.258473 | 5 | 3.0 |

| 2013-01-05 | 0.141728 | 2.327885 | 1.172082 | 5 | 4.0 |

| 2013-01-06 | -1.335232 | -1.043389 | 2.569405 | 5 | 5.0 |

df2=df.copy()

df2[df2>0] =-df2

df2

| A | B | C | D | F | |

|---|---|---|---|---|---|

| 2013-01-01 | 0.000000 | 0.000000 | -1.240960 | -5 | NaN |

| 2013-01-02 | -0.747014 | -0.098745 | -2.674977 | -5 | -1.0 |

| 2013-01-03 | -0.344874 | -1.071516 | -0.138593 | -5 | -2.0 |

| 2013-01-04 | -0.032506 | -0.100209 | -0.258473 | -5 | -3.0 |

| 2013-01-05 | -0.141728 | -2.327885 | -1.172082 | -5 | -4.0 |

| 2013-01-06 | -1.335232 | -1.043389 | -2.569405 | -5 | -5.0 |

Missing data

df1=df.reindex(index=dates[0:4],columns=list(df.columns)+["E"])

df1.loc[dates[0]:dates[1],"E"]=1

df1 #reindexing: to change,add,delete the index on a specified axis

| A | B | C | D | F | E | |

|---|---|---|---|---|---|---|

| 2013-01-01 | 0.000000 | 0.000000 | -1.240960 | 5 | NaN | 1.0 |

| 2013-01-02 | 0.747014 | -0.098745 | -2.674977 | 5 | 1.0 | 1.0 |

| 2013-01-03 | 0.344874 | -1.071516 | 0.138593 | 5 | 2.0 | NaN |

| 2013-01-04 | 0.032506 | 0.100209 | -0.258473 | 5 | 3.0 | NaN |

df1.dropna(how="any") #drop any rows that have missing data

| A | B | C | D | F | E | |

|---|---|---|---|---|---|---|

| 2013-01-02 | 0.747014 | -0.098745 | -2.674977 | 5 | 1.0 | 1.0 |

df1.fillna(value=5)

| A | B | C | D | F | E | |

|---|---|---|---|---|---|---|

| 2013-01-01 | 0.000000 | 0.000000 | -1.240960 | 5 | 5.0 | 1.0 |

| 2013-01-02 | 0.747014 | -0.098745 | -2.674977 | 5 | 1.0 | 1.0 |

| 2013-01-03 | 0.344874 | -1.071516 | 0.138593 | 5 | 2.0 | 5.0 |

| 2013-01-04 | 0.032506 | 0.100209 | -0.258473 | 5 | 3.0 | 5.0 |

pd.isna(df1)

| A | B | C | D | F | E | |

|---|---|---|---|---|---|---|

| 2013-01-01 | False | False | False | False | True | False |

| 2013-01-02 | False | False | False | False | False | False |

| 2013-01-03 | False | False | False | False | False | True |

| 2013-01-04 | False | False | False | False | False | True |

Operations

df.mean() #stats

A -0.011518

B 0.035741

C -0.049055

D 5.000000

F 3.000000

dtype: float64

df.mean(1) #axis=1,row

2013-01-01 0.939760

2013-01-02 0.794659

2013-01-03 1.282390

2013-01-04 1.574848

2013-01-05 2.528339

2013-01-06 2.038157

Freq: D, dtype: float64

df.mean(0) #axis=0,columns

A -0.011518

B 0.035741

C -0.049055

D 5.000000

F 3.000000

dtype: float64

s=pd.Series([1,3,5,np.nan,6,8],index=dates).shift(2) #shft: move forward 2

s

2013-01-01 NaN

2013-01-02 NaN

2013-01-03 1.0

2013-01-04 3.0

2013-01-05 5.0

2013-01-06 NaN

Freq: D, dtype: float64

df.sub(s,axis="index")

| A | B | C | D | F | |

|---|---|---|---|---|---|

| 2013-01-01 | NaN | NaN | NaN | NaN | NaN |

| 2013-01-02 | NaN | NaN | NaN | NaN | NaN |

| 2013-01-03 | -0.655126 | -2.071516 | -0.861407 | 4.0 | 1.0 |

| 2013-01-04 | -2.967494 | -2.899791 | -3.258473 | 2.0 | 0.0 |

| 2013-01-05 | -4.858272 | -2.672115 | -3.827918 | 0.0 | -1.0 |

| 2013-01-06 | NaN | NaN | NaN | NaN | NaN |

df

| A | B | C | D | F | |

|---|---|---|---|---|---|

| 2013-01-01 | 0.000000 | 0.000000 | -1.240960 | 5 | NaN |

| 2013-01-02 | 0.747014 | -0.098745 | -2.674977 | 5 | 1.0 |

| 2013-01-03 | 0.344874 | -1.071516 | 0.138593 | 5 | 2.0 |

| 2013-01-04 | 0.032506 | 0.100209 | -0.258473 | 5 | 3.0 |

| 2013-01-05 | 0.141728 | 2.327885 | 1.172082 | 5 | 4.0 |

| 2013-01-06 | -1.335232 | -1.043389 | 2.569405 | 5 | 5.0 |

df.apply(np.cumsum) #cumulative

| A | B | C | D | F | |

|---|---|---|---|---|---|

| 2013-01-01 | 0.000000 | 0.000000 | -1.240960 | 5 | NaN |

| 2013-01-02 | 0.747014 | -0.098745 | -3.915937 | 10 | 1.0 |

| 2013-01-03 | 1.091887 | -1.170260 | -3.777344 | 15 | 3.0 |

| 2013-01-04 | 1.124393 | -1.070051 | -4.035818 | 20 | 6.0 |

| 2013-01-05 | 1.266121 | 1.257834 | -2.863735 | 25 | 10.0 |

| 2013-01-06 | -0.069110 | 0.214444 | -0.294330 | 30 | 15.0 |

df.apply(lambda x: x.max() -x.min()) #apply

A 2.082246

B 3.399400

C 5.244382

D 0.000000

F 4.000000

dtype: float64

s=pd.Series(np.random.randint(0,7,size=10)) #histogramming

s

0 5

1 0

2 5

3 5

4 3

5 1

6 4

7 3

8 6

9 3

dtype: int32

s.value_counts().sort_index() #add sort_index()

0 1

1 1

3 3

4 1

5 3

6 1

dtype: int64

String Methods

s=pd.Series(["A","B","C","Aaba","Baca",np.nan,"CABA","dog","cat"])

s.str.lower()

0 a

1 b

2 c

3 aaba

4 baca

5 NaN

6 caba

7 dog

8 cat

dtype: object

Merge

df=pd.DataFrame(np.random.randn(10,4)) #concat

df

| 0 | 1 | 2 | 3 | |

|---|---|---|---|---|

| 0 | -0.025866 | -0.178967 | -0.808695 | 0.927172 |

| 1 | -0.842495 | 0.707233 | 0.222576 | 0.455422 |

| 2 | -0.328742 | -1.270708 | -0.508805 | -0.018087 |

| 3 | 1.272699 | -1.671005 | -1.107406 | 0.243692 |

| 4 | -1.867842 | 0.893841 | -1.515523 | -1.106920 |

| 5 | -0.588635 | -0.480732 | 0.034501 | 0.348179 |

| 6 | 0.270778 | 0.133031 | -1.371957 | -2.978966 |

| 7 | 0.650808 | 0.742551 | -2.189231 | -0.465399 |

| 8 | -0.919177 | 1.063680 | -0.075367 | -0.147211 |

| 9 | -0.288339 | -0.125634 | 0.758688 | -0.080836 |

pieces=[df[:3],df[3:7],df[7:]]

pd.concat(pieces)

| 0 | 1 | 2 | 3 | |

|---|---|---|---|---|

| 0 | -0.025866 | -0.178967 | -0.808695 | 0.927172 |

| 1 | -0.842495 | 0.707233 | 0.222576 | 0.455422 |

| 2 | -0.328742 | -1.270708 | -0.508805 | -0.018087 |

| 3 | 1.272699 | -1.671005 | -1.107406 | 0.243692 |

| 4 | -1.867842 | 0.893841 | -1.515523 | -1.106920 |

| 5 | -0.588635 | -0.480732 | 0.034501 | 0.348179 |

| 6 | 0.270778 | 0.133031 | -1.371957 | -2.978966 |

| 7 | 0.650808 | 0.742551 | -2.189231 | -0.465399 |

| 8 | -0.919177 | 1.063680 | -0.075367 | -0.147211 |

| 9 | -0.288339 | -0.125634 | 0.758688 | -0.080836 |

left=pd.DataFrame({"key": ["foo","foo"],"lval":[1,2]})

right=pd.DataFrame({"key": ["foo","foo"],"rval":[4,5]})

print(left)

print(right)

key lval

0 foo 1

1 foo 2

key rval

0 foo 4

1 foo 5

pd.merge(left,right,on="key") #SQL style merge

| key | lval | rval | |

|---|---|---|---|

| 0 | foo | 1 | 4 |

| 1 | foo | 1 | 5 |

| 2 | foo | 2 | 4 |

| 3 | foo | 2 | 5 |

left=pd.DataFrame({"key": ["foo","bar"],"lval":[1,2]})

right=pd.DataFrame({"key": ["foo","bar"],"rval":[4,5]})

print(left)

print(right)

key lval

0 foo 1

1 bar 2

key rval

0 foo 4

1 bar 5

pd.merge(left,right, on="key")

| key | lval | rval | |

|---|---|---|---|

| 0 | foo | 1 | 4 |

| 1 | bar | 2 | 5 |

Grouping

df=pd.DataFrame({"A":["foo","bar","foo","bar","foo","bar","foo","foo"],

"B":["one","one","two","three","two","two","one","three"],

"C": np.random.randn(8),

"D": np.random.randn(8)

}

)

df

| A | B | C | D | |

|---|---|---|---|---|

| 0 | foo | one | 1.048840 | -0.833822 |

| 1 | bar | one | -1.137147 | -2.027540 |

| 2 | foo | two | -0.657997 | 0.608757 |

| 3 | bar | three | -0.413567 | -0.753453 |

| 4 | foo | two | -0.454805 | -1.496538 |

| 5 | bar | two | 0.493420 | -0.384181 |

| 6 | foo | one | -0.561297 | 1.679049 |

| 7 | foo | three | 1.841777 | 0.482405 |

df.groupby("A").sum()

| C | D | |

|---|---|---|

| A | ||

| bar | -1.057294 | -3.165174 |

| foo | 1.216518 | 0.439850 |

df.groupby(["A","B"]).sum()

| C | D | ||

|---|---|---|---|

| A | B | ||

| bar | one | -1.137147 | -2.027540 |

| three | -0.413567 | -0.753453 | |

| two | 0.493420 | -0.384181 | |

| foo | one | 0.487543 | 0.845226 |

| three | 1.841777 | 0.482405 | |

| two | -1.112803 | -0.887780 |

Reshaping

tuples = list(

zip(

*[

["bar", "bar", "baz", "baz", "foo", "foo", "qux", "qux"],

["one", "two", "one", "two", "one", "two", "one", "two"],

]

)

)

index= pd.MultiIndex.from_tuples(tuples,names=["first","second"])

df=pd.DataFrame(np.random.randn(8,2),index=index,columns=["A","B"])

df2=df[:4]

df2

| A | B | ||

|---|---|---|---|

| first | second | ||

| bar | one | 0.890952 | -0.375587 |

| two | 1.001416 | -1.333297 | |

| baz | one | -0.562930 | -0.951540 |

| two | 0.273894 | -0.832586 |

stacked=df2.stack()

stacked #compresse a level in DataFrame's columns

first second

bar one A 0.890952

B -0.375587

two A 1.001416

B -1.333297

baz one A -0.562930

B -0.951540

two A 0.273894

B -0.832586

dtype: float64

stacked.unstack() # inverse operation of stack()

| A | B | ||

|---|---|---|---|

| first | second | ||

| bar | one | 0.890952 | -0.375587 |

| two | 1.001416 | -1.333297 | |

| baz | one | -0.562930 | -0.951540 |

| two | 0.273894 | -0.832586 |

print(stacked.unstack(0))

print(stacked.unstack(1))

first bar baz

second

one A 0.890952 -0.562930

B -0.375587 -0.951540

two A 1.001416 0.273894

B -1.333297 -0.832586

second one two

first

bar A 0.890952 1.001416

B -0.375587 -1.333297

baz A -0.562930 0.273894

B -0.951540 -0.832586

Pivot tables

df=pd.DataFrame({

"A": ["one", "one", "two", "three"] * 3,

"B": ["A", "B", "C"] * 4,

"C": ["foo", "foo", "foo", "bar", "bar", "bar"] * 2,

"D": np.random.randn(12),

"E": np.random.randn(12),

}

)

df

| A | B | C | D | E | |

|---|---|---|---|---|---|

| 0 | one | A | foo | -0.373453 | -1.406135 |

| 1 | one | B | foo | 1.264894 | -1.389622 |

| 2 | two | C | foo | -0.242048 | -1.593581 |

| 3 | three | A | bar | 2.716601 | 1.612520 |

| 4 | one | B | bar | -1.528681 | 0.602288 |

| 5 | one | C | bar | -0.074750 | 2.044902 |

| 6 | two | A | foo | 0.110851 | -0.740685 |

| 7 | three | B | foo | -0.915239 | 0.589600 |

| 8 | one | C | foo | 0.377855 | -0.094179 |

| 9 | one | A | bar | -0.965858 | -1.770097 |

| 10 | two | B | bar | -0.943708 | 0.623095 |

| 11 | three | C | bar | -0.096407 | -0.637673 |

pd.pivot_table(df,values="D",index=["A","B"],columns=["C"])

| C | bar | foo | |

|---|---|---|---|

| A | B | ||

| one | A | -0.965858 | -0.373453 |

| B | -1.528681 | 1.264894 | |

| C | -0.074750 | 0.377855 | |

| three | A | 2.716601 | NaN |

| B | NaN | -0.915239 | |

| C | -0.096407 | NaN | |

| two | A | NaN | 0.110851 |

| B | -0.943708 | NaN | |

| C | NaN | -0.242048 |

Time series

rng = pd.date_range("1/1/2012", periods=100, freq="S")

ts = pd.Series(np.random.randint(0, 500, len(rng)), index=rng)

ts.resample("5Min").sum() #sum every 5 mins

2012-01-01 26705

Freq: 5T, dtype: int32

ts.resample("1Min").sum() #sum every 1 min

2012-01-01 00:00:00 15006

2012-01-01 00:01:00 11699

Freq: T, dtype: int32

rng = pd.date_range("3/6/2012 00:00", periods=5, freq="D")

ts = pd.Series(np.random.randn(len(rng)), rng)

ts

2012-03-06 1.385727

2012-03-07 -1.201650

2012-03-08 -0.135337

2012-03-09 -0.287460

2012-03-10 0.454925

Freq: D, dtype: float64

ts_utc = ts.tz_localize("UTC")

ts_utc

2012-03-06 00:00:00+00:00 1.385727

2012-03-07 00:00:00+00:00 -1.201650

2012-03-08 00:00:00+00:00 -0.135337

2012-03-09 00:00:00+00:00 -0.287460

2012-03-10 00:00:00+00:00 0.454925

Freq: D, dtype: float64

ts_utc.tz_convert("US/Eastern") #covertion to another time zone

2012-03-05 19:00:00-05:00 1.385727

2012-03-06 19:00:00-05:00 -1.201650

2012-03-07 19:00:00-05:00 -0.135337

2012-03-08 19:00:00-05:00 -0.287460

2012-03-09 19:00:00-05:00 0.454925

Freq: D, dtype: float64

rng = pd.date_range("1/1/2012", periods=5, freq="M")

ts = pd.Series(np.random.randn(len(rng)), index=rng)

ts

2012-01-31 0.252942

2012-02-29 -0.028175

2012-03-31 -0.639577

2012-04-30 -2.170122

2012-05-31 0.290485

Freq: M, dtype: float64

ps=ts.to_period()

ps

2012-01 0.252942

2012-02 -0.028175

2012-03 -0.639577

2012-04 -2.170122

2012-05 0.290485

Freq: M, dtype: float64

ps.to_timestamp()

2012-01-01 0.252942

2012-02-01 -0.028175

2012-03-01 -0.639577

2012-04-01 -2.170122

2012-05-01 0.290485

Freq: MS, dtype: float64

prng = pd.period_range("1990Q1", "2000Q4", freq="Q-NOV")

ts = pd.Series(np.random.randn(len(prng)), prng)

ts.index = (prng.asfreq("M", "e") + 1).asfreq("H", "s") + 9

ts.head()

1990-03-01 09:00 0.747117

1990-06-01 09:00 1.203087

1990-09-01 09:00 -0.941095

1990-12-01 09:00 -0.126587

1991-03-01 09:00 -1.061227

Freq: H, dtype: float64

Categoricals

df = pd.DataFrame(

{"id": [1, 2, 3, 4, 5, 6], "raw_grade": ["a", "b", "b", "a", "a", "e"]}

)

df

| id | raw_grade | |

|---|---|---|

| 0 | 1 | a |

| 1 | 2 | b |

| 2 | 3 | b |

| 3 | 4 | a |

| 4 | 5 | a |

| 5 | 6 | e |

df["grade"] = df["raw_grade"].astype("category")

df["grade"]

0 a

1 b

2 b

3 a

4 a

5 e

Name: grade, dtype: category

Categories (3, object): ['a', 'b', 'e']

df["grade"]=df["grade"].cat.set_categories(["very good", "good", "very bad"])

df["grade"] #rename the categories

0 NaN

1 NaN

2 NaN

3 NaN

4 NaN

5 NaN

Name: grade, dtype: category

Categories (3, object): ['very good', 'good', 'very bad']

df.sort_values(by="grade")

| id | raw_grade | grade | |

|---|---|---|---|

| 0 | 1 | a | NaN |

| 1 | 2 | b | NaN |

| 2 | 3 | b | NaN |

| 3 | 4 | a | NaN |

| 4 | 5 | a | NaN |

| 5 | 6 | e | NaN |

df.groupby("grade").size()

grade

very good 0

good 0

very bad 0

dtype: int64

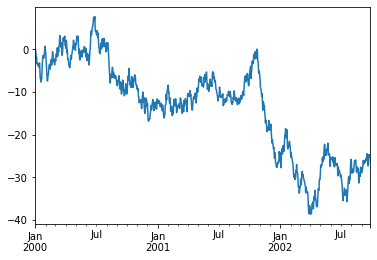

Plotting

import matplotlib.pyplot as plt

plt.close("all") #close a figure window

ts = pd.Series(np.random.randn(1000), index=pd.date_range("1/1/2000", periods=1000))

ts = ts.cumsum()

ts.plot();

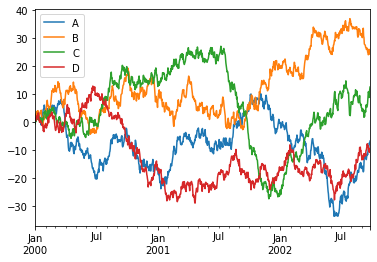

df=pd.DataFrame(

np.random.randn(1000, 4), index=ts.index, columns=["A", "B", "C", "D"]

)

df=df.cumsum()

plt.figure();

<Figure size 432x288 with 0 Axes>

df.plot();

plt.legend(loc='best');

Getting data in/out (csv)

df.to_csv("foo.csv") #writing to c csv file

pd.read_csv("foo.csv") #reading from a csv file

| Unnamed: 0 | A | B | C | D | |

|---|---|---|---|---|---|

| 0 | 2000-01-01 | 1.783768 | 2.045073 | -0.507718 | 1.123060 |

| 1 | 2000-01-02 | 0.196039 | 2.711749 | 0.416838 | 1.080363 |

| 2 | 2000-01-03 | 1.208280 | 1.600025 | 0.633446 | 1.539429 |

| 3 | 2000-01-04 | 2.404634 | 3.237997 | 0.450288 | 0.621748 |

| 4 | 2000-01-05 | 1.188598 | 3.202251 | 1.098251 | 1.717754 |

| ... | ... | ... | ... | ... | ... |

| 995 | 2002-09-22 | -9.342127 | 24.392143 | 10.564637 | -10.164637 |

| 996 | 2002-09-23 | -8.419079 | 26.097426 | 8.878737 | -9.608197 |

| 997 | 2002-09-24 | -7.336001 | 25.781776 | 10.905896 | -10.742856 |

| 998 | 2002-09-25 | -7.736148 | 25.178845 | 11.460886 | -10.487685 |

| 999 | 2002-09-26 | -6.694487 | 24.414987 | 12.606476 | -9.257457 |

1000 rows × 5 columns

HDF5

df.to_hdf("foo.h5", "df")

pd.read_hdf("foo.h5", "df")

Excel

df.to_excel("foo.xlsx", sheet_name="Sheet1")

pd.read_excel("foo.xlsx", "Sheet1", index_col=None, na_values=["NA"])

Gotchas

if pd.Series([False, True, False]):

print("I was True")

---------------------------------------------------------------------------

ValueError Traceback (most recent call last)

~\AppData\Local\Temp/ipykernel_12628/2697453150.py in <module>

----> 1 if pd.Series([False, True, False]):

2 print("I was True")

~\miniconda3\envs\cal2020\lib\site-packages\pandas\core\generic.py in __nonzero__(self)

1536 def __nonzero__(self):

1537 raise ValueError(

-> 1538 f"The truth value of a {type(self).__name__} is ambiguous. "

1539 "Use a.empty, a.bool(), a.item(), a.any() or a.all()."

1540 )

ValueError: The truth value of a Series is ambiguous. Use a.empty, a.bool(), a.item(), a.any() or a.all().

if pd.Series([False, True, False]) is not None:

print("I was True")

I was True

if pd.Series([False, False, False]) is not None:

print("I was True") <BR/>

##None is not definition

I was True